There’s a theory called ‘The Uncanny Valley’ regarding humans’ emotional response to human-like robots. From the Wikipedia entry:

> The Uncanny Valley is a hypothesis about robotics concerning the emotional response of humans to robots and other non-human entities. It was introduced by Japanese roboticist Masahiro Mori in 1970 […]
>
> Mori’s hypothesis states that as a robot is made more humanlike in its appearance and motion, the emotional response from a human being to the robot will become increasingly positive and empathic, until a point is reached beyond which the response quickly becomes strongly repulsive. However, as the appearance and motion continue to become less distinguishable from a human being’s, the emotional response becomes positive once more and approaches human-human empathy levels.
>
> This area of repulsive response aroused by a robot with appearance and motion between a “barely-human” and “fully human” entity is called the Uncanny Valley. The name captures the idea that a robot which is “almost human” will seem overly “strange” to a human being and thus will fail to evoke the requisite empathetic response required for productive human-robot interaction.

While most of us don’t interact with human-like robots frequently enough to accept or reject this theory, many of us have seen movies like The Polar Express or Final Fantasy: The Spirits Within, which use realistic – as opposed to cartoonish – computer-generated human characters. Although the filmmakers take great care to make the characters’ expressions and movements replicate those of real human actors, many viewers find these almost-but-not-quite-human characters to be unsettling or even creepy.

The problem is that our minds have a model of how humans should behave and the pseudo-humans, whether robotic or computer-generated images, don’t quite fit this model, producing a sense of unease – in other words, we know that something’s not right – even if we can’t precisely articulate what’s wrong.

Why don’t we feel a similar sense of unease when we watch a cartoon like The Simpsons, where the characters are even further away from our concept of humanness? Because in the cartoon environment, we accept that the characters are not really human at all – they’re cartoon characters and are self-consistent within their animated environment. Conversely, it would be jarring if a real human entered the frame and interacted with the Simpsons, because eighteen years of Simpsons cartoons and eighty years of cartoons in general have conditioned us not to expect this [Footnote 1].

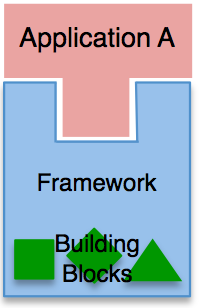

There’s a lesson here for software designers, and one that I’ve talked about recently – we must ensure that we design our applications to remain consistent with the environment in which our software runs. In more concrete terms: a Windows application should look and feel like a Windows application, a Mac application should look and feel like a Mac application, and a web application should look and feel like a web application.

Obvious, you say? I’d agree that software designers and developers generally observe this rule except in the midst of a technological paradigm shift. During periods of rapid innovation and exploration, it’s tempting and more acceptable to violate the expectations of a particular environment. I know this is a sweeping and abstract claim, so let me back it up with a few examples.

Does anyone remember Active Desktop? When Bill Gates realized that the web was a big deal, he directed all of Microsoft to web-enable all Microsoft software products. Active Desktop was a feature that made the Windows desktop look like a web page and allowed users to initiate the default action on a file or folder via a hyperlink-like single-click rather than the traditional double-click. One of the problems with Active Desktop was that it broke all of users’ expectations about interacting with files and folders. Changing from the double-click to the single-click model subtly changed other interactions, like drag and drop, select, and rename. The only reason I remember this feature is that so many non-technical friends at Penn State asked me to help them turn it off.

Another game-changing technology of the 1990s was the Java platform. Java’s attraction was that the language’s syntax looked and felt a lot like C and C++ (which many programmers knew) but it was (in theory) ‘write once, run anywhere’ – in other words, multiplatform. Although Java took hold on the server-side, it never took off on the desktop as many predicted it would. Why didn’t it take off on the desktop? My own experience with using Java GUI apps of the late 1990s was that they were slow and they looked and behaved weirdly vs. standard Windows (or Mac or Linux) applications. That’s because they weren’t true Windows/Mac/Linux apps. They were Java Swing apps which emulated Windows/Mac/Linux apps. Despite the herculean efforts of the Swing designers and implementers, they couldn’t escape the Uncanny Valley of emulated user interfaces.

Eclipse and SWT took a different approach to Java-based desktop apps [Footnote 2]. Rather than emulating native desktop widgets, SWT favors direct delegation to native desktop widgets [Footnote 3], resulting in applications that look like Windows/Mac/Linux applications rather than Java Swing applications. The downside of this design decision is that SWT widget developers must manually port a new widget to each supported desktop environment. This development-time and maintenance pain point only serves to emphasize how important the Eclipse/SWT designers judged native look and feel to be.
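To make the emulation-versus-delegation trade-off a bit more concrete, here’s a toy sketch in Python. It is not real Swing or SWT code: the class names, the `NATIVE_TOOLKITS` table, and the strings it returns are all hypothetical stand-ins I made up for illustration. It only models the shape of the two designs: one code path that imitates every platform, versus a lookup that hands off to whatever the platform itself provides.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for each platform's own widget toolkit.
# The strings are illustrative labels, not real API calls.
NATIVE_TOOLKITS = {
    "windows": lambda label: f"[Win32 button: {label}]",
    "mac": lambda label: f"[Cocoa button: {label}]",
    "linux": lambda label: f"[GTK button: {label}]",
}


@dataclass
class EmulatedButton:
    """Emulation style: the toolkit draws its own imitation of a platform button."""
    label: str
    platform: str

    def render(self) -> str:
        # A single code path for every platform; the result only imitates the host.
        return f"[toolkit-drawn imitation of a {self.platform} button: {self.label}]"


@dataclass
class DelegatingButton:
    """Delegation style: hand the work to the platform's own widget."""
    label: str
    platform: str

    def render(self) -> str:
        native = NATIVE_TOOLKITS.get(self.platform)
        if native is None:
            # The cost of delegation: each platform needs its own port.
            raise NotImplementedError(f"no native port for {self.platform!r} yet")
        return native(self.label)
```

In this toy model, `DelegatingButton("OK", "mac").render()` returns the platform’s own button, while `EmulatedButton("OK", "mac").render()` returns a look-alike. Asking the delegating version for a platform that isn’t in the table fails outright, which is the porting burden described above in miniature.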

Just as Windows/Mac/Linux apps have a native look and feel, so too do browser-based applications. The native widgets of the web are the standard HTML elements – hyperlinks, tables, buttons, text inputs, select boxes, and colored spans and divs. We’ve had the tools to create richer web applications ever since pre-standards DOMs and JavaScript 1.0. But it’s only the combination of DOM (semi-)standardization, XHR de-facto standardization, emerging libraries, and exemplary next-gen apps like Google Suggest and Gmail that has led a non-trivial segment of the software community to attempt richer web UIs, which I believe we’re now lumping under the banner of ‘Ajax’ (or is it ‘RIA’?). As with the web and Java before it, the availability of Ajax technology is causing some developers to diverge from the native look and feel of the web in favor of a user interface style I call “desktop app in a web browser”. For an example of this style of Ajax app, take a few minutes and view this Flash demo of the Zimbra collaboration suite.

To me, Zimbra doesn’t in any way resemble my mental model of a web application; it resembles Microsoft Outlook [Footnote 4]. On the other hand, Gmail, which is also an Ajax-based email application, almost exactly matches my mental model of how a web application should look and feel (screenshots). Do I prefer the Gmail look and feel over the Zimbra look and feel? Yes. Why? Because over the past twelve years, my mind has developed a very specific model of how a web application should look and feel, and because Gmail aligns with this model, I can immediately use it and it feels natural to me. Gmail uses Ajax to accelerate common operations (e.g. email address auto-complete) and to enable data transfer sans jarring page refresh (e.g. refresh Inbox contents), but its core look and feel remains very similar to that of a traditional web page. In my view, this is not a shortcoming; it’s a smart design decision.

So if you’re considering or actively building Ajax/RIA applications, I’d recommend that you consider the Uncanny Valley of user interface design and recognize that when you build a “desktop app in a web browser”-style application, you’re violating users’ unwritten expectations of how a web application should look and behave. This choice may have a significant negative impact on learnability, pleasantness of use, and adoption. The fact that you can create web applications that resemble desktop applications does not imply that you should; it only means that you have one more option, and a corresponding set of trade-offs, to consider when making design decisions.

[Footnote 1] Who Framed Roger Rabbit is a notable exception.

[Footnote 2] I work for the IBM group (Eclipse/Jazz) that created SWT, so I may be biased.

[Footnote 3] Though SWT favors delegation to native platform widgets, it sometimes uses emulated widgets if the particular platform doesn’t provide an acceptable native widget. This helps it get around the ‘least-common denominator’ problem of AWT.

[Footnote 4] I’m being a bit unfair to Zimbra here because there’s a scenario where its Outlook-like L&F really shines. If I were a CIO looking to migrate off of Exchange/Outlook to a cheaper multiplatform alternative, Zimbra would be very attractive: since Zimbra is functionally consistent with Outlook, I’d expect that Outlook users could transition to Zimbra fairly quickly.